Neil here! This is my no-longer-weekly-help-procrastination-is-a-bane newsletter to my close friends! One of the main goals of the newsletter is to keep in touch with people I won't interact with as much anymore; you can do that with a short reply to this email. Apparently a lot of my friends read them, but I never hear about it because they don't respond; that's why any reply, even a single emoji, suffices. All past issues can be found here. Si vous ne parlez que français, utilisez Google Translate!

I.

Work on projects

I’ve met people who don’t do things because they think they don’t have the skills to do them. They'll tell me they're just high school kids; they don't know computer science, can't write properly, or aren't the kind of person who sends emails to people unprompted (even when all these would help them accomplish their goals).

I think this is the wrong way to think about it. You don't acquire skills, then act on something; you act on something, then gain skills! Even then, there's something wrong with this reasoning. I believe the problem is the concept of "skills" in the first place.

From the inside, doing something extraordinary doesn't feel like having the skills to do something extraordinary then acting them out. It just feels like... acting. No one would say you have the "skill" to reach for your coffee mug and bring it to your lips—even though it definitely is a skill (one which you have and babies don't) which you had to acquire then act out. You just... do it, without thinking.

I wanted to make an anonymous compliment messaging system for my class, but didn't have any programming skills to speak of... so I asked ChatGPT to program it for me. I'm a little ashamed of this fact, because I hang around a lot of computer-science (CS) literate people and now I feel like an imposter among them. But 1) every programmer worth their salt is using ChatGPT to the extent it’s useful; 2) I accidentally picked up skills by debugging the software again and again for hours until it worked; and 3) only the results matter, and if you continue just doing things instead of learning the skills first, you’re going to wake up one day with both results and skills.

Work on projects, not on skills. (E.g. make a website or an app; don’t take a CS class at university.) You’re probably not even right as to which skills will be useful for you in the end; you could go through 6 months of CS classes and later learn that 90% of what you learned is completely and utterly useless on a daily basis. Learn by doing.

I bet you’d be surprised how many direct results you could get tomorrow if you aimed at them directly instead of attempting to rack up the skills to get there later. This applies to the university → stable job → financial security narrative as much as anything else.

(The part of you that’s scared of the real world will reject this advice instinctively. Look out for it and observe it closely.)

II.

Gratitude, systematized

I made a project! People have noted that the advice in the More Dakka post concerning gratitude was good:

More dakka is the simple step from "gratitude makes me feel good" --> "I should deliberately feel more gratitude". More more dakka is "I should deliberately feel twice as much gratitude". If gratitude worked once, why stop there? You should stop when you meet diminishing returns: but chances are you're not there yet.

I acted on this. Here’s a Google Form where you can note, at any time, three random things you’re grateful for. Once you complete the form, it will send you an email with your submission in it. Whenever you feel down, you’ll just have to look up “gratitude form” in your inbox and you’ll find everything your past selves were grateful for.

You can also create your own version here; I made a template. You just need to click the "create" button and you'll have a perfectly functional Google Form of this kind.

Let me know if it's of any use to you!

III.

Situational Awareness

Want to give yourself imposter syndrome? A 22-year-old man named Leopold Aschenbrenner enrolled at Columbia University at age 15, studying statistics/economics, and graduated as valedictorian 4 years later. He then worked at OpenAI’s Superalignment lab (whose primary goal was to ensure artificial intelligence is aligned with human goals) until he got fired, allegedly for publishing internal documents. [1]

[1] The lab no longer exists because everyone in charge of it left, worried about the “reckless culture” at OpenAI which might, according to most employees, bring about human extinction (gifted New York Times article here).

This section is about the following post, which is making waves on the AI scene. It's called "Situational Awareness" and it's about AGI.

“Artificial General Intelligence” (AGI) is a model which is at least as smart as a human at all cognitive tasks (read: anything a human can do via remote work).

If we build AGI, it will change everything. Running a computer that thinks just as well as a human but whose circuits are thousands of times faster than our brains and which can duplicate itself instantly... Imagine how abruptly the economy would morph. Currently, the big AI labs (like OpenAI) are explicitly aiming to build AGI, and openly admit they have no idea what's going to happen after that.

How far away is AGI, at our current rate of progress?

Leopold does the obvious thing: he extrapolates from past trends. In the last decade or so, progress in machine learning has been exponential at a consistent rate (read: “AI has gotten consistently smarter year on year, and this trend has never broken”).

The main driver of recent AI progress is simple: labs are using more computing power and more data to train their models. This is more dakka in action!

If you follow the line into the future—if you expect AI progress to go just as it has in the last 10 years—then we’re on target to reach AGI by 2027.

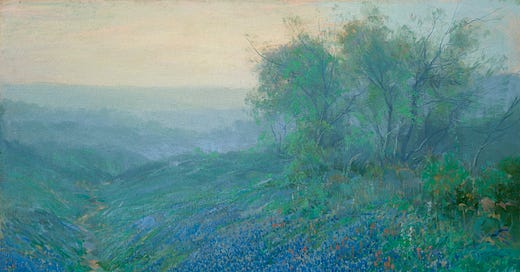

This graph is explained in detail in the actual post.

2-3 years doesn't sound reassuring at all! Even if AI progress were to slow down threefold for some reason we can't currently imagine, that's still superhuman AI within the decade! It's difficult to convey just how important this fact is.
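If you want to check that arithmetic yourself, here's a tiny sketch. It assumes (my simplification, not something from Leopold's post) that "slowing down threefold" just means the remaining time gets multiplied by three; the function name is made up for illustration.

```python
def stretched_timeline(baseline_years: float, slowdown: float) -> float:
    """Years until AGI if progress runs `slowdown` times slower.

    Assumption: a slowdown simply multiplies the remaining time.
    """
    return baseline_years * slowdown


# The 2-3 year estimate from the extrapolation above.
baseline_range = (2, 3)
slowdown = 3  # "slow down threefold"

slowed_range = tuple(stretched_timeline(y, slowdown) for y in baseline_range)
within_decade = all(years < 10 for years in slowed_range)

print(slowed_range, within_decade)
```

Under that assumption, 2-3 years becomes 6-9 years, which is still inside the decade.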

The stock market does not reflect a world in which all cognitive work is automated within the decade. National security organisations are not treating AI as the most strategic technology of the 21st century. The vast majority of ordinary humans are not living like their world will turn upside down (or even disappear altogether [2]!).

[2] We can't align AIs to human values yet, which is bad news if there are too many smart AIs all at once.

There'd be a lot more protests if that were the case, and microchip factories would probably be forcefully shut down (read this Time magazine opinion for more).

Anyway, the post (the first in a series) is good at conveying this. And remember: it was written by an OpenAI employee, and the majority of OAI employees agree that AGI will be at least as transformative as he describes—and their estimates for when AGI will arrive are about the same.

IV.

News

I’ve uploaded all past issues of the newsletter onto Substack. If you subscribe to the Substack, I’ll stop sending you emails the classic way.

I'm working on a paper in the context of the AI Safety Fundamentals governance course, an online program.

I got through to the interview phase (after a battery of tests) in both the NonTrivial and ESPR programs; check them out if you like.

I'm in the middle of exam season in France (this being my last year of high school). I'd love to say that's the reason it took me so long to send this email, but in truth my rate of procrastination simply increases linearly with the number of people I add to the newsletter :(

I'm doing silly things instead of studying for exams

This was the 10th neilsletter. To unsubscribe, you'll have to defy me in 6-dimensional colorblind chess with multiverse timetravel and acausal trade (which is exactly as hard as it seems) OR mark my emails as spam. You can do that too.