[Hello everyone! This email is part of a feed where I update my close friends ∼ weekly. You may read it, skip it, delete it, or tape it to the wall and pray to it every night.

If you want to support me: reply with a one-sentence email, even if you didn’t read the entire update. You can literally just answer with “hello whee” and I’ll be happy.]

Increasing odds of survival:

There are two new promising avenues I just discovered.

The first is calling my congressional representative’s office and telling them about AI risk. The office finds it useful when registered voters call and tell them about a specific bill they support. For them, it’s good evidence of what voters want, handed to them wrapped in a little ribbon. I’m told they’re very responsive. I’ll find whatever Californian bill best serves the goal of shutting down AI capabilities research, and call them about it. Luckily for me, if I register as a voter (which I will do soon), I’ll be registered in exactly the part of the US where all the Doom Machines are being built.

The second is research on what changes people’s minds about AI risk. LessWrong, which is the internet’s let’s-stop-the-Doom-Machines central, doesn’t have enough research on what intuitions the average person has about AI risk or how to make them care about it.

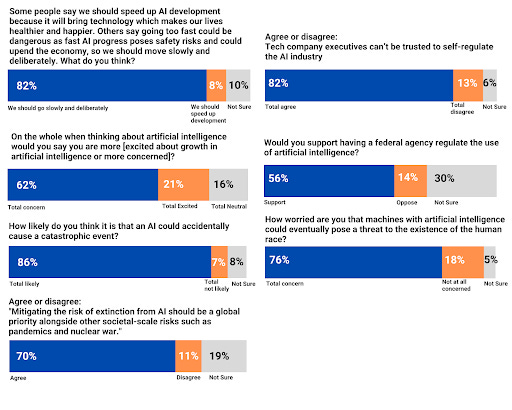

Here’s the research they have so far: this poll by the AI safety institute shows that most American voters have the correct intuition when it comes to building the Doom Machines. (The correct intuition is “stop building them”.)

But I still think there are some unanswered questions. I ran a survey in Magendie to find answers to them. I asked questions like “how much do you trust the people in charge of building AI?”, “how likely do you think extinction is?”, and more. In one group, I first asked whether they were generally pessimists or optimists about the world, and, no matter their answer, their probability of extinction was 20% higher than the other group’s! I have a hypothesis for why this is, and will test it soon.

In the same research vein, I also sent a warning about extinction by AI to my class group chat. How would the class react? A few things I learned:

You have a set amount of weirdness points. Spend them wisely. I have many beliefs people would find weird but “AI will most likely kill us if we don’t shut down all capabilities research right now” is by far the most important.

I have already spent a lot of weirdness points in my class. I don’t know exactly what my reputation there is, but “sane” is not the global impression. The warning mostly slid off like water off a duck’s back. [1]

For a few people, though, it seems to have had the desired effect! In one instance, a friend of mine who’s generally more charismatic than I am seems to have normalized my weird beliefs by discussing the warning with someone else. This evidence of backchannel conversations makes me hopeful!

I will think about everything I’ve learned from this and the survey and will publish it in a series of LessWrong posts soon. I’m working on them daily.

The Astronomical Waste project is also hobbling along at speed, and I will publish it Thursday after receiving final thoughts from the friends I asked feedback from.

Mutually exclusive habits

Okay, the next part of the email is about productivity/optimization/self-help/all that jargon. You might find it useful for yourself.

I am severely annoyed by what seem to be “mutually exclusive habits”. I should have expected that taking on a new habit would erase an older one, given I am a finite being, but still… It’s not fun to run into this problem.

I used to write at least 2,000 words a day. I don’t anymore. I used to read 50 pages a day of Crime and Punishment, Dune, or The Fellowship of the Ring. Nowadays, I feel guilty about not reading anything.

These are all habits I would have loved to keep. But new ones replaced the old. I do more things these days, even if I’m still flawed and projects still sometimes take days longer than expected. I’ve managed to make my thoughts more public than they used to be, by publishing blog posts on LessWrong and telling more friends about what I’m doing (how meta wow).

So… I can’t have it all? Perhaps, but there are some cheat codes I’ve discovered. First, I’ve been growing more comfortable with the idea of publishing a horribly flawed draft of something, so the writing I do in personal docs gets published more often. Second, I’ve found an incredible escape from procrastination: I work on something, and when I inevitably get the urge to procrastinate, instead of opening YouTube or Twitter or Gmail, I open my book and read a chapter.

As it turns out, there are a lot of random gaps in the day that I usually fill with “quick but useless tasks”, like scrolling. You know that feeling where you’re like “I deserve a 2-minute break, that’s too short to do something valuable like reading a book, so I’ll scroll instead”, and then haha, joke’s on you, your scrolling lasts 15 minutes instead of 2 and you could have spent that time reading instead? Yeah, well, it’s those pockets of whoops-it’s-15-minutes-actually that I’m exploiting in the name of finishing books.

Kirby’s encouraging comments have been a great help to me recently.

Post of the week:

I’ve read something like 300 blog posts in my life (cf. the sanity thing earlier). Choosing the most important ones is terribly difficult, but I’ll choose one for each weekly update anyway as a recommendation to you. One is a lot better than zero.

“What an actually pessimistic containment strategy looks like” is one of the first LessWrong posts that popularized the idea of “avert doom by not building the Doom Machine, instead of trying to build a slightly-less-doomy machine before everyone else”. [2] It also expresses the philosophy of “every tiny little gain is worth it”. It spells out my motivation for writing this email, and for all the other things I do.

(To be clear though, I’m not looking for tiny little gains for our odds of survival. Convincing a few more people about AI risk and running a few surveys is good, but not nearly as helpful as other potential actions I could do. It’s those actions I’m looking for.)

Ω

[1] Yesterday I was told I shouldn’t be sending long messages in the group chat because “c’est chiant” (“it’s annoying”). I’d have said “well, why do we even have a group chat if everything you don’t consider serious, like inside jokes or extinction from AI, is not appropriate for it?”, but I don’t have to, because I own that group chat and can moderate it however I want, so MWAHAHA in your FACE!

[2] A lot of people in the ainotkilleveryoneist crowd think that our best chance of survival is for them to get to Artificial General Intelligence first and use it to stop any other potential Doom Machines from being built. They believe they are safer than their competitors, and so, paradoxically, rush ahead in capabilities in the name of safety! This post explains this clearly, but it’s not the post of the week.

Ω

[That’s all folks! You’ve been reading the Neil’s Updates Times. To unsubscribe, either mark my emails as spam or declare a ping pong duel with me and if you win I’ll stop sending you emails.

I encourage you to write your own version of this, because I’m curious about what’s going on in your life. Do it, even if it’s just a few sentences, even if it’s just for one or two friends. The mathematicians won’t tell you this, but there’s a huge difference between 1 and 0.]